Almost two decades ago, the late Robin Williams starred in Bicentennial Man as an NDR-114 robot that was eventually declared a human by the courts. This remains science fiction.

Three years ago, Sophia, built by Hong Kong-based Hanson Robotics, became the first AI-powered robot to be granted citizenship of a country, Saudi Arabia, even though Sophia pales in comparison to the fictional humanoid in Bicentennial Man or those in Surrogates.

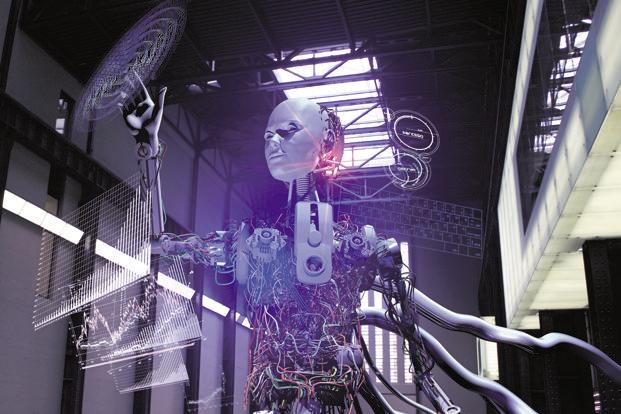

The typical image of a robot, though, is that of a machine that either makes no mistakes or is dangerous, like Skynet. Would humans warm to social robots more if the robots made, or admitted, mistakes?

A social robot is typically an artificial intelligence (AI) system that is designed to interact with humans and other robots.

Researchers at Yale University believe the answer is ‘Yes’. They conducted an experiment in which 153 people were divided into 51 groups, each comprising three humans and a robot.

The experiment

Each group played a tablet-based game in which members worked together to build the most efficient railroad routes over 30 rounds. Groups were assigned to one of three conditions characterized by different types of robot behavior.

At the end of each round, the robots either remained silent, uttered a neutral, task-related statement (such as the score or number of rounds completed), or expressed vulnerability through a joke, personal story, or by acknowledging a mistake. Moreover, all the robots occasionally lost a round.

The experiment showed more equal verbal participation among team members in groups with the vulnerable and neutral robots than among members in groups with silent robots, suggesting that the presence of a speaking robot encourages people to talk to each other in a more even-handed way.

‘I made a mistake’

Unlike the superhuman androids shown in sci-fi films, these robots acknowledged their errors, as good teammates would when playing a game. “Sorry, guys, I made the mistake this round,” says the robot. “I know it may be hard to believe, but robots make mistakes too.”

This scenario occurred multiple times during the study of robots’ effects on human-to-human interactions.

The study, published on March 9 in the Proceedings of the National Academy of Sciences, revealed that humans on teams that included a robot expressing vulnerability communicated more with each other.

They also reported a more positive group experience than people whose teams had silent robots or robots that made neutral statements (like reciting the game’s score).

“We know that robots can influence the behavior of humans they interact with directly, but how robots affect the way humans engage with each other is less well understood,” said Margaret L. Traeger, a Ph.D. candidate in sociology at the Yale Institute for Network Science (YINS) and the study’s lead author.

“We are interested in how society will change as we add forms of artificial intelligence to our midst,” said Nicholas A. Christakis, Sterling Professor of Social and Natural Science. “As we create hybrid social systems of humans and machines, we need to evaluate how to program the robotic agents so that they do not corrode how we treat each other.”

Sarah Strohkorb Sebo, a Ph.D. candidate in the Department of Computer Science and a co-author of the study, believes the findings of this study can “inform the design of robots that promote social engagement, balanced participation, and positive experiences for people working in teams”.